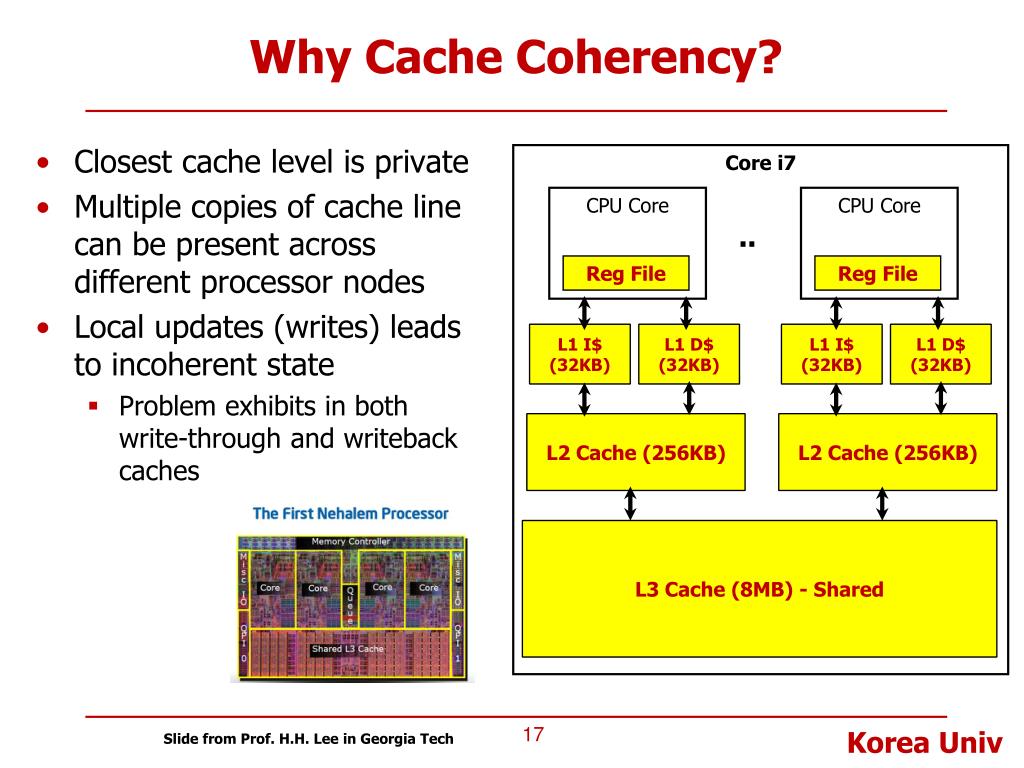

From the SoC / uncore perspective, the core behaves as a single entity, so it can be snooped and will return a single answer (and an updated copy of the line if it needs to). Within the core, each cache can behave according to its design: a cache that is inclusive towards its upper levels (e.g. an inclusive L2 that has all the data in the L1) can serve as a snoop filter by knowing whether a further snoop to the L1 is needed. If the line is in the upper levels, or if the L2 cache is not guaranteed to be inclusive, the core will need to create another snoop to these levels. Alternatively, you can start out by snooping all levels in parallel, which may be faster in some cases but more wasteful (in terms of power and cache access slots). Finally, for some upper-level caches it may be enough to send a snoop without waiting for a response (for example, instruction caches usually can't have modified lines to write back), while others will require an entire response protocol.

Keep in mind that most out-of-order designs need to snoop not only caches but also certain buffers that hold in-flight operations, in case they need to be flushed. For example, x86 with its TSO policy will usually also need to snoop the load buffer to flush in-flight loads (since at their commit point the data is not guaranteed to be correct anymore). Some store-buffer designs may also need to be snooped if they hold data that should be observable.

If the L2 (or the lowest-level private cache in the core) is managing this internal snooping, then eventually it needs to collect all responses and decide on the overall response to send to the shared cache outside. To make matters worse, when multiple cache levels each keep MESI states, these states don't have to agree. For example, you can have a modified line in the L1 while an older copy of it still resides in the L2.
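
To make the flow concrete, here is a minimal toy sketch of how a core might answer an invalidating snoop. It is only an illustration under simplifying assumptions, not how any real core implements it: the names `State`, `Cache`, and `snoop_core` are hypothetical, the hierarchy is just one L1 and one L2, an inclusive L2 is assumed to filter L1 snoops purely by hit/miss, and the load and store buffers are ignored.

```python
from enum import IntEnum


class State(IntEnum):
    """MESI states, ordered so that a larger value is a 'stronger' response."""
    I = 0
    S = 1
    E = 2
    M = 3


class Cache:
    """Toy cache level (hypothetical): maps a line address to (state, data)."""

    def __init__(self, name):
        self.name = name
        self.lines = {}

    def snoop_invalidate(self, addr):
        """Drop the line and report the (state, data) it held before."""
        return self.lines.pop(addr, (State.I, None))


def snoop_core(l1, l2, addr, l2_inclusive=True):
    """Answer one invalidating snoop on behalf of the whole core.

    Returns (combined_state, writeback_data, probed_l1).
    """
    l2_state, l2_data = l2.snoop_invalidate(addr)

    # Assumption: an inclusive L2 acts as a snoop filter, so an L2 miss
    # proves the L1 cannot hold the line and the L1 probe is skipped.
    l1_state, l1_data = State.I, None
    probed_l1 = (not l2_inclusive) or (l2_state != State.I)
    if probed_l1:
        l1_state, l1_data = l1.snoop_invalidate(addr)

    # The per-level states need not agree, so report the strongest one.
    combined = max(l1_state, l2_state)

    # A modified L1 copy is newer than whatever the L2 still holds.
    if l1_state == State.M:
        data = l1_data
    elif l2_state == State.M:
        data = l2_data
    else:
        data = None  # nothing dirty, so no writeback is needed
    return combined, data, probed_l1


if __name__ == "__main__":
    l1, l2 = Cache("L1"), Cache("L2")
    l1.lines[0x40] = (State.M, "new")   # modified in the L1
    l2.lines[0x40] = (State.E, "old")   # older copy still in the L2
    print(snoop_core(l1, l2, 0x40))     # L1 data wins, L1 was probed
    print(snoop_core(l1, l2, 0x80))     # L2 miss filters the L1 probe
```

The sketch probes the levels one after another; the parallel variant described above would simply probe the L1 unconditionally and merge the two responses in the same way.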
